Granicus | Inspection App

Role: UX lead for cross-functional team | Granicus Product organization

Responsibility: Leading a cross-functional team through identifying user challenges and experimenting with solutions to combine two disparate products into one cohesive app

Background:

Who are the users?

- Inspectors for local government jurisdictions

What problem is being solved?

- Improving the experience of inspectors as they inspect residential, commercial, or industrial properties to ensure that each property meets local government requirements for codes, zoning laws, and other regulations

How?

- Building a new inspection app grounded in generative user research

- Supporting phone and tablet devices running iOS, Android, and Windows

Why?

- Currently two apps exist in our product suite that serve this use case

- Both current apps were created by engineers without UX involvement

- Both apps are more technical than intuitive for users and have usability issues

- The tech stack both apps are built on (Cordova) is failing, and the apps need to be rebuilt on more future-proof tech (React Native)

- Goal is to introduce one inspection app that supports both product user groups

Considerations:

- Both of the existing products came to Granicus through acquisition, so they were built completely separately and only share a common use case

- Each inspection app currently connects to its own back office desktop product, so a single future app will need to connect seamlessly to data across both of the separate back office products

- The products are currently differentiated by jurisdiction size: Product A supports larger-population jurisdictions and Product B supports smaller-population jurisdictions

When?

- As soon as possible…eek!

Process

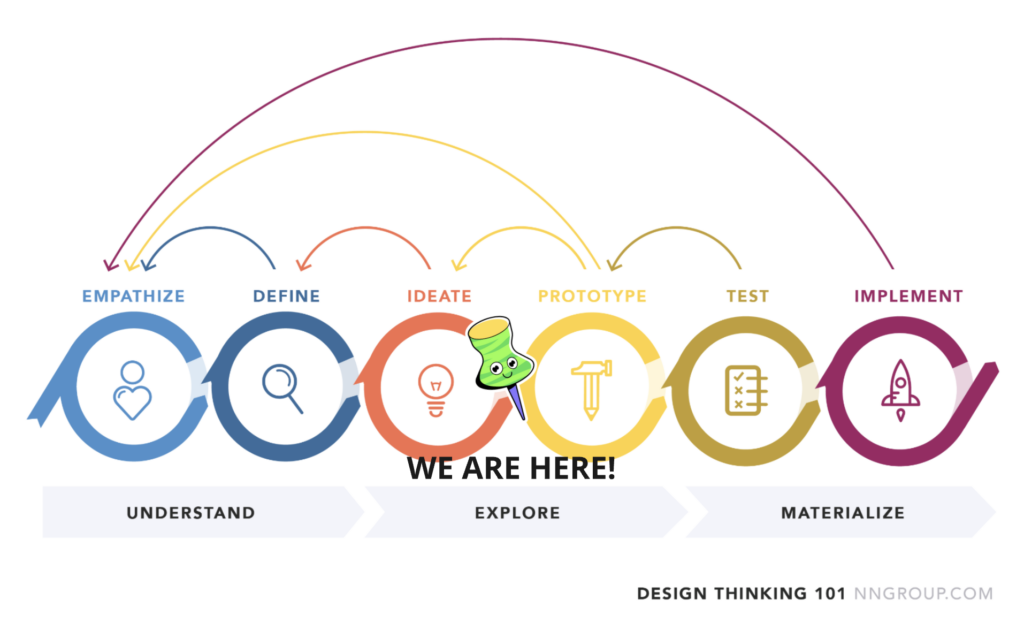

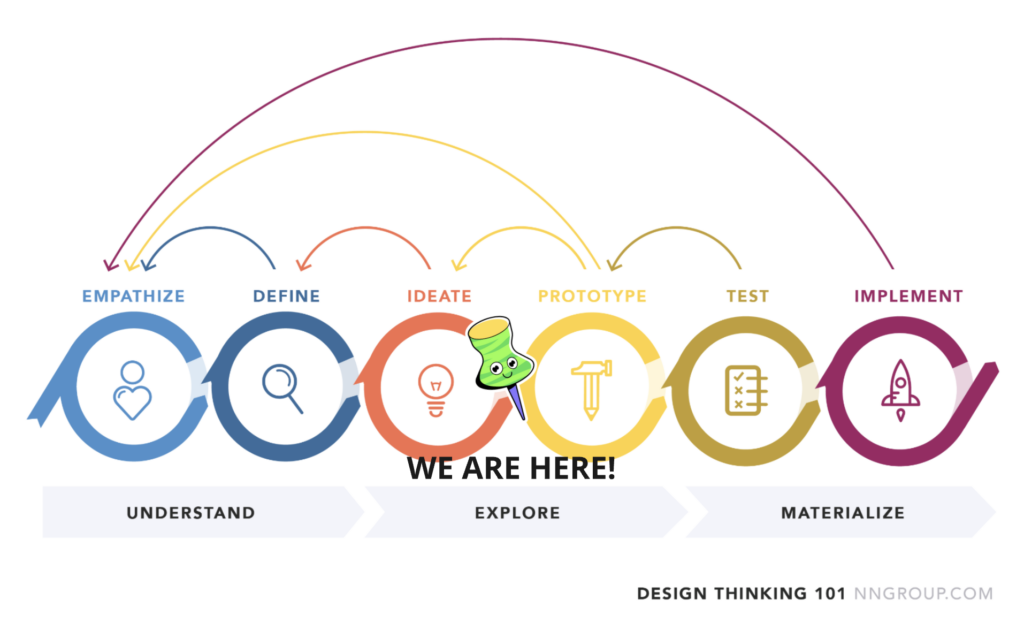

The framework I am following through this project is NNG’s framework for design thinking. I find this structure useful for this project in that it prioritizes iteration and innovation, which will be needed to meet the complexity of combining two very different product experiences (both with their own unique challenges for users) into one app that optimizes the experience and meets the needs of all users.

Though there are many ways to visualize the design thinking process by this definition, I like the iterative nature captured in the visual below as it most accurately represents the overlapping phases and multitude of activities happening for this project at a single point in time.

Design Thinking 101, NNG: nngroup.com/articles/design-thinking

Phase 1: Empathize

Goal

The intent of this phase is to understand users and to learn everything I possibly can about their experience. This goes beyond their use of the existing apps, and it cannot be limited to only listening to what users say.

In this phase, I prioritize observing users’ behaviors, actions and processes. I also seek to understand users’ thoughts, mindsets, motivations and desires with more depth. The outcome of the processes within this phase is that I, and the entire project team, have a more holistic understanding of the user and their needs.

Project Kickoff

In this project, our team prioritized an inclusive early kickoff with all involved to ensure that collaboration is foundational to our process.

This had many benefits throughout our project. Most importantly, the team was constantly aligned on user insights and understood how we used these insights to inform decisions, because we all participated in discovery calls, research sessions, and more. This level of alignment would be difficult to achieve if we all worked in silos, which would mean a disconnect at every stage of the process and make collaborative decision-making incredibly difficult. With a shared vision and alignment, collaboration was seamless throughout this entire process.

We also reduced risk and cost through this collaboration because we shared an understanding that allowed us to solve the right problems for users, with the right solutions. This translated to efficiency in the later stages of the process where it is more difficult to adapt the plans for the project and effort is a more expensive resource.

I like to read this graphic as our three teams: UX, Product, and Engineering. Each team has its own responsibilities and actions that it owns for this project, but our consistent state is all three teams working together.

Discovery Calls

Discovery calls were the first activity that I conducted to learn from users. Our team recruited for a total of 10 calls with users from both Product A and Product B to learn about their roles, their processes and experiences completing inspections and code enforcement cases, and their thoughts and feelings as they are completing their work. These sessions were conducted primarily using very open-ended questioning, but also featured a brief guided tour exercise where users showed us the steps to completing their work via screenshare.

These sessions were incredibly helpful for our team to learn from our users. Below are just a few examples of the many statements we heard from our users during these discovery calls:

“We’re seeing a decrease in the number of inspections despite a steady number of permits. We think this may be attributed to a shift from new construction to quicker upfit inspections in our area.”

“I complete an average of 10-12 inspections per day.”

“My team generally doesn’t like change.”

“Our team stopped using the app because we couldn’t find inspections or permits, we could only find code enforcement cases.”

“Inspectors find it clunky to access detailed permit information on the mobile app. They often have to switch to the web to find relevant details, which is not efficient.”

“It takes a lot of clicks to get through inspections.”

“Our jurisdiction has poor coverage in certain city areas that contribute to sync problems. Inspectors face connectivity issues in the field, leading them to return to the office to complete the sync process on their laptops.”

“Inspectors using iPhones tend to experience more technical issues with the app compared to those using Android devices, including problems with data sync and app functionality.”

This is my listening and thinking face, not a grumpy face haha.

Quantitative Survey

In addition to our discovery calls, I also conducted a survey to gather quantitative data about customer usage of the app and back office products, devices used, and primary actions completed in the app. The survey also included a space for users to provide open-ended feedback.

There was a short recruiting period, so unfortunately recruiting fell slightly short of the 100-participant goal defined for statistical significance. However, a total of 74 participants completed the survey, with a near-even split across the two products.

In analyzing this survey data, I was able to define some insights around non-functional requirements for the new app and gained further insights from users to compile with the qualitative data from discovery calls.

Phase 2: Define

Goal

The define phase of this project is where I take all that was learned from the activities of the empathize phase and analyze the findings for insights to determine the problems to solve for users. I then use the defined problems to create an outcome (or ‘how might we’ statement) that becomes the vision for what will be accomplished by completing this project.

Discovery Call Analysis

In this activity, I took all that we heard from users in the discovery calls and analyzed this data to determine trends and gain insights.

This started with organizing all of the notes from the discovery calls into common categories:

- participant/agency information

- information on devices, operating systems, and offline capabilities

- priority actions and content for users

- what users like about the current products

- what problems users are having with the current products

- additional information/other

As I analyzed the data within each of these categories, trends emerged that defined key findings for each one. For example:

- A large proportion of users across both products cited using a combination of tools to complete their inspections, not the app alone. This means that the app does not give users everything they need to complete their work solely within the app.

- The data around users’ primary actions and content within the products confirmed our team’s assumption that the primary actions users take in the apps are those that support the task of completing an inspection:

- Referencing permit and inspection information

- Entering results on an inspection

- Taking photos for documentation of an inspection attempt

- Managing inspections by assignment, scheduling, and contacting customers

- Reporting on inspections

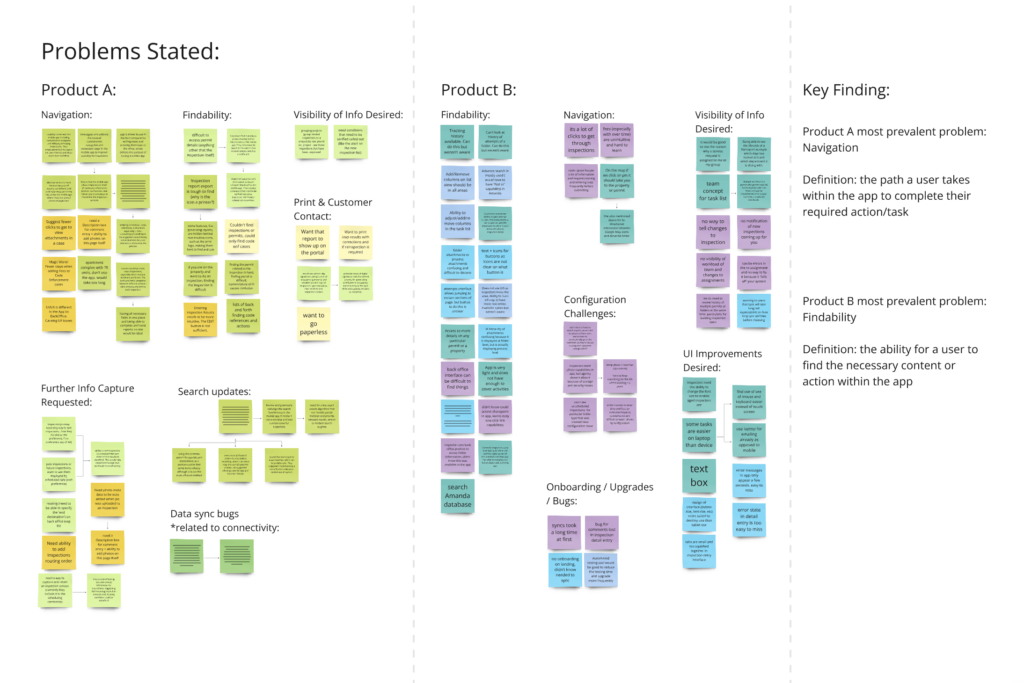

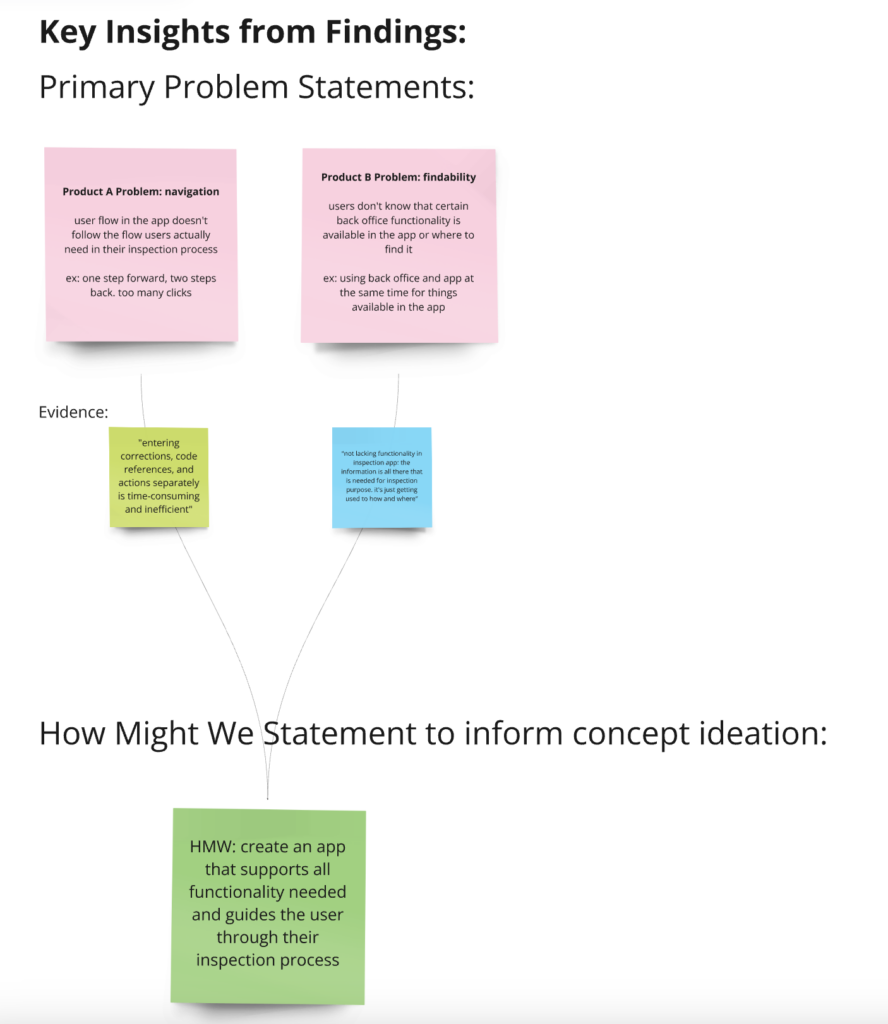

Define Problems and 'How Might We' Statements

The most important insights that came out of analyzing the discovery call data were which problems were most important to solve and would have the greatest impact on users. This is an important exercise because, as I alluded to previously, there were many data points and problems identified in our conversations with users. I know that we are unlikely to solve all of the identified problems immediately when launching this new app, so I want to prioritize and distill these problems to determine the most important ones that can be solved for users first.

Based on a combination of frequency and severity of the feedback received, I defined the primary user problem statements for each product that can be solved in the new app:

Product A Problem: Navigation

I learned that the existing navigation in Product A’s app does not match the average inspector’s process.

An example of this: one user mentioned having to go multiple clicks into a section of the app to enter one portion of their inspection attempt, then they needed to go back multiple menus and enter a new section with multiple clicks to complete the next task in their workflow.

“The current workflow slows down inspections, especially when multiple violations are found. The back-and-forth navigation between different sections adds unnecessary time to each inspection.”

Multiple users described this problem as cumbersome and time-consuming.

Product B Problem: Findability

I learned that users struggled to find functionality that exists in Product B’s current app, and therefore did not know that it was available.

One user we talked with said, “We’re not lacking functionality in the inspection app: the information is all there that is needed for inspection purposes. It’s just getting used to how and where.”

‘How Might We’ Statement:

To turn these prioritized problem statements around and combine them into the goal for a single app experience, I created a ‘How Might We’ statement that defines the outcome that can be achieved with the new app. With these insights, I defined the vision with this statement:

‘How might we create an app that supports all functionality needed and guides the user through their inspection process?’

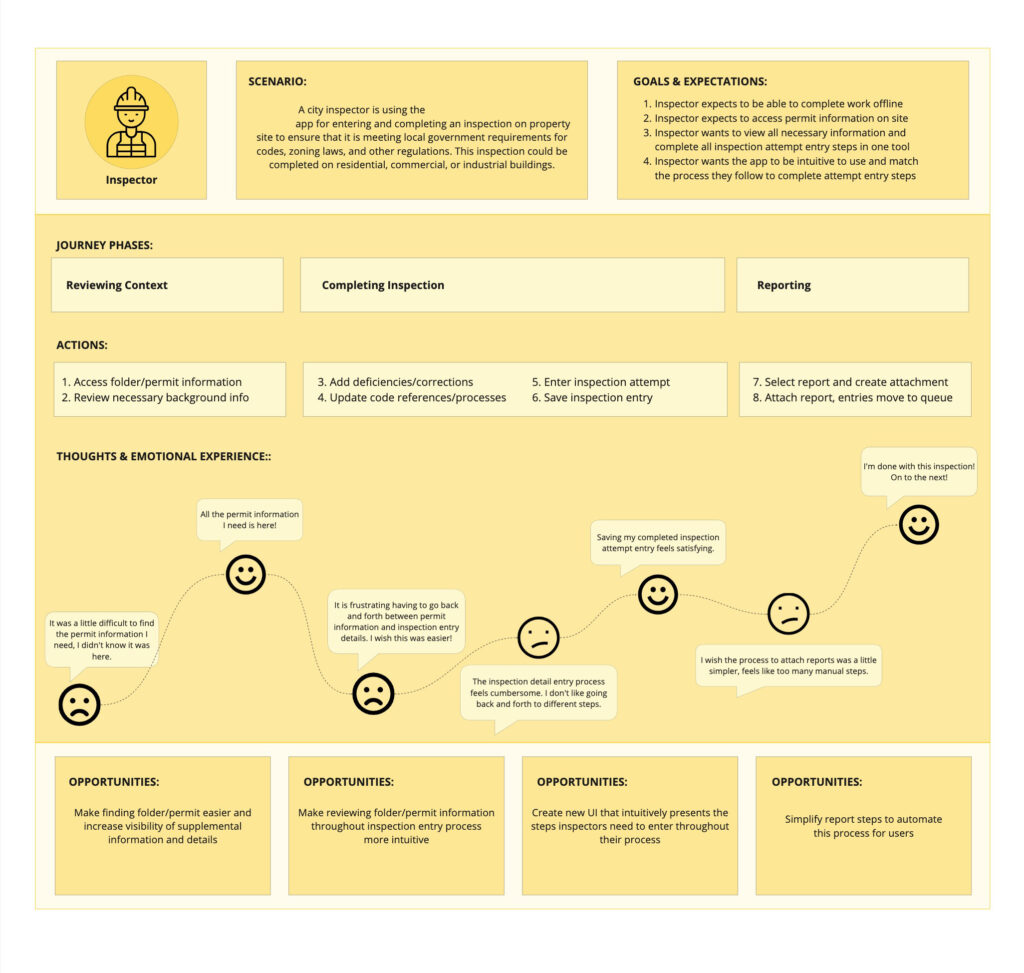

User Journey Mapping

With all of those insights gathered from the data analysis in the last step, I next needed to better understand the user journey so that I could define:

- how the prioritized persona of an inspector works, across both Product A and Product B, and how to create a cohesive user journey in a unified app

- also, where the prioritized problem statements occur in the user journey, to get a better perspective on how to solve those problems

This led me to complete a user journey mapping exercise with the goals of:

- understanding and visualizing the actions users take to complete their workflow

- identifying user pain points across their journey

I mapped the user journey on 3 layers:

- Overall daily workflow of an inspector, looking at the various devices, technologies, human interactions, etc. they use throughout their process (similar to a service blueprint)

- The specific user journey identifying the exact click path for the average inspector within both product apps

- A simplified overarching workflow that creates a combined user journey for the new app that will serve both customer groups, and that captures users’ insights and feelings across this journey

A lot was learned from all of these levels of mapping, but looking specifically at the cohesive user journey map, I was able to identify opportunities where the new app could improve user experience. The primary opportunities identified were:

- Simplifying how users find and review inspection permit information while they’re completing inspection tasks

- Creating a new UI that presents the steps of an inspector’s work in a way that matches their on-site process

- Updating the reporting process to optimize automation where possible

Phase 3: Ideate

Goal

Now that the team is well informed on the user perspective through defining users’ primary problems and mapping their journey, it’s time to start ideating on what the new app could look like to solve these problems and create a more intuitive experience for users.

In this phase of work I brainstormed ideas, developed concepts, and conducted user research to learn if these concepts would help solve the problems that were identified.

Brainstorm Ideas & Develop Concepts

To determine how we are going to improve the product for users, I start with a blank page and brainstorm crazy ideas.

I like to begin this process at a high level by identifying ‘What If’ statements. Turning the problems around into solution ideas is a good starting place for ideating on how to solve them. For example:

- Problem: Navigation

- Idea: What if there was an alternative flow for inspectors to enter their attempts that required fewer back-and-forth steps?

- Problem: Findability

- Idea: What if we used alternative language to describe functionality in the new app? (Example: inbox becomes task list)

Once I have some ideas in written form, it’s time to fill that blank piece of paper with rough sketches of these ideas. I like to follow a similar format to the ‘crazy 8’s’ exercise where I create frames on the page and set a short timer to fill a single frame with a sketch, then move on to the next until all are filled with idea sketches.

From there, I sort the ideas using an impact/effort matrix to consider which best solve the problems and which are most technically feasible. These considerations are used to determine which ideas become concepts that will be researched with users.
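For readers who prefer to see the sorting step written down, here is a minimal sketch of an impact/effort sort. The `Idea` shape, the 1 to 5 scales, the midpoint, the quadrant labels, and the example scores are all illustrative assumptions, not values from this project; in practice the team places ideas on the matrix together in discussion.

```typescript
// Hypothetical idea record. The 1-5 scores are illustrative, not project data.
interface Idea {
  name: string;
  impact: number; // 1 (low) to 5 (high): how well the idea solves a user problem
  effort: number; // 1 (low) to 5 (high): how hard the idea is to build
}

type Quadrant = "quick win" | "big bet" | "fill-in" | "avoid";

// Place an idea in one of the four quadrants of the matrix.
function quadrant(idea: Idea, midpoint: number = 3): Quadrant {
  const highImpact = idea.impact >= midpoint;
  const highEffort = idea.effort >= midpoint;
  if (highImpact && !highEffort) return "quick win";
  if (highImpact && highEffort) return "big bet";
  if (!highImpact && !highEffort) return "fill-in";
  return "avoid";
}

// Order ideas so that high-impact, low-effort ideas come first.
function prioritize(ideas: Idea[]): Idea[] {
  return [...ideas].sort(
    (a, b) => (b.impact - b.effort) - (a.impact - a.effort)
  );
}

// Example ideas taken from the 'What If' statements; scores are made up.
const ideas: Idea[] = [
  { name: "Alternative flow for entering attempts", impact: 5, effort: 4 },
  { name: "Rename inbox to task list", impact: 3, effort: 1 },
];

for (const idea of prioritize(ideas)) {
  console.log(`${idea.name}: ${quadrant(idea)}`);
}
```

A simple difference of impact and effort is only one possible ordering; the quadrant label is usually the more useful output for a team conversation.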

User Research Plan

I use user research to test the concepts identified in the last step and to understand whether these solution concepts help solve users’ challenges.

In this project, I’m also testing a unified user journey and experience for the new app. It brings together parts of Product A’s experience and parts of Product B’s experience, but it will also feel completely different at various points of the user journey for both user groups.

The first step is creating the research plan. For this, I define the goal and questions of the study, participant profiles, method, test environment/equipment/logistics, and reporting.

As opposed to the conversations with users through discovery calls, in these user research sessions I have a very focused goal in conducting the research and a very specific question I am trying to answer. For this study in particular, I defined one primary question and one sub-question:

- The primary question is the how might we statement: How might we create an app that supports all functionality needed and guides the user through their inspection process?

- Sub-question 1: How do we combine Product A and B apps into a single app experience that supports both user groups?

For the participant profiles, I defined the following guidelines:

- Sample size tentatively = 10. I planned to recruit at least 5 participants per product group and treated this number as sufficient, based on the point of diminishing returns for qualitative research as described by NNG.

- Participant criteria: Inspectors (not code enforcement officers, back office administrators, etc.). Current app users of live, active accounts (none in implementation).

- Individual, semi-structured interviews

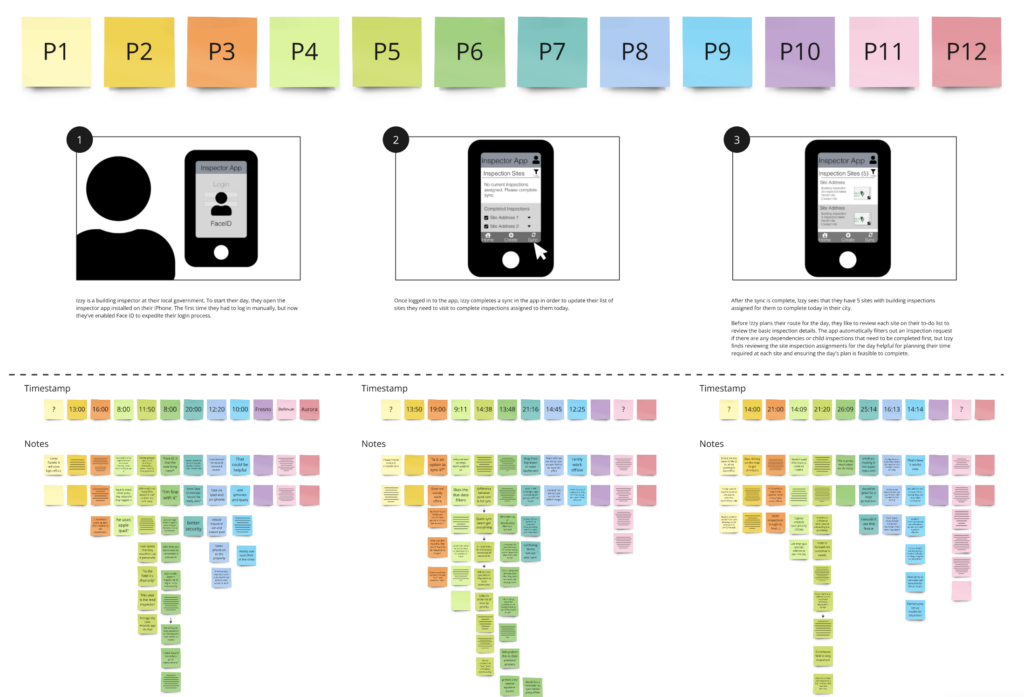

User Research Methodology

The methodology used for this research is called storyboard scenario testing. In this process, I present our participants with a conceptual workflow of an inspector’s process where I go frame-by-frame introducing concepts at each step of the user journey through this scenario and receive feedback on these new concepts and the overall storyboard journey.

As a note, I ensure users understand that these concepts are purely conceptual and that the team has made no guarantee to create this functionality in the product, but that these concepts are presented to understand if they will benefit users’ processes.

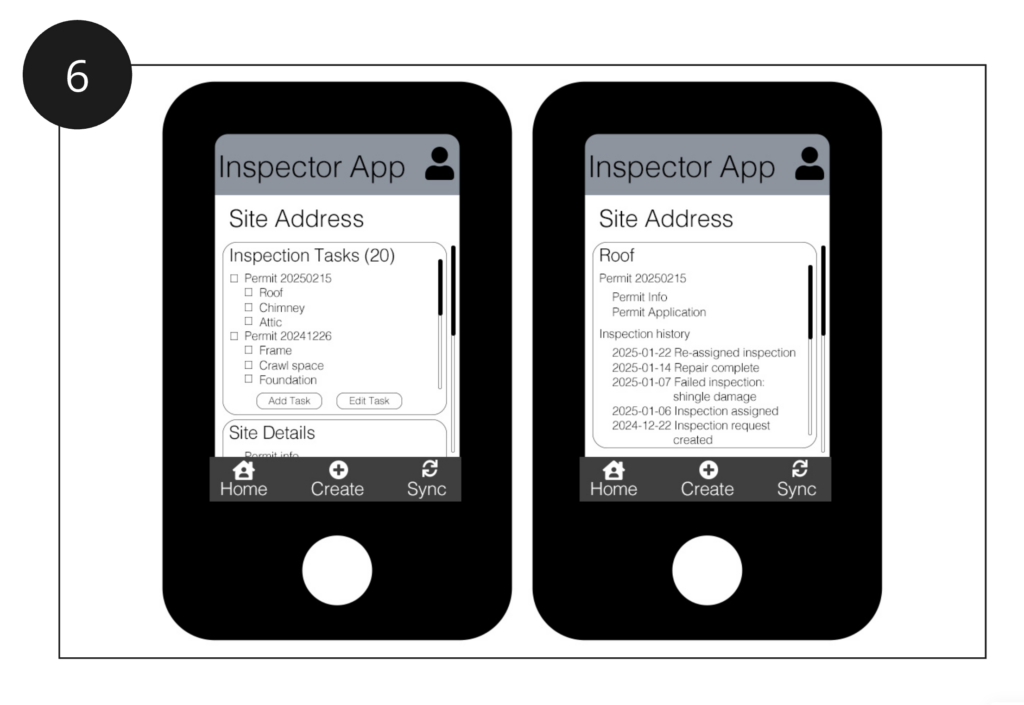

User Research Concepts

In this research, some specific new concepts I presented were:

- an entirely new information architecture that is attempting to present information in a way that better matches the mental model and on-site process of inspectors

- a new method of entering inspection attempt results that is trying to improve efficiency and match the user flow of this process for inspectors

- changing the location and labelling of various information in the inspection interface in an attempt to improve findability

- and many more!

User Research Process

12 participants were recruited to complete the user research sessions (7 for Product A, 5 for Product B).

Particularly with the research methodology implemented for this study, I am always impressed by participants’ ability to think creatively and give informative feedback on highly conceptual ideas! Throughout the 12 sessions conducted, I spoke with inspectors across various inspection disciplines and geographic regions, and received such interesting and helpful feedback from all of their unique perspectives.

Here are some best practices I implement when conducting user research sessions to help them run smoothly and be as effective as possible:

- Making the participant feel comfortable through a brief chat and introduction at the beginning.

- Thanking the participant (almost excessively) for taking time out of their day to help us improve the product. This is especially important as our company does not offer financial incentive/compensation for participating in research studies.

- Expectation setting for who is involved in the session, the goal of the session, how long the session will take, what the participant will be asked to do during the session, and the rights and privacy of the participant regarding this session and information shared.

- Noting that honest feedback from the participant is very important and that my feelings will not be hurt by any feedback that might be perceived as negative toward the concepts.

- Remaining neutral in questioning and feedback throughout the session duration.

- Using open-ended questioning at the end of the session to get holistic storyboard feedback (example: if you had a magic wand, what, if anything, would you change about the inspection workflow depicted in this storyboard?).

- Leaving space at the end for the participant to ask any questions of the team to provide additional value for their time participating.

User Research Analysis

Analyzing this data allowed me to determine and validate, once again by a combination of frequency and significance, which problems are most important to solve and whether the concepts presented were effective in solving these problems.
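One way to make the frequency-and-significance weighting explicit is sketched below. This is an assumption about how such a ranking could be computed, not the exact method used in this study: the `Finding` shape, the 1 to 3 significance scale, the scoring formula, and the placeholder themes and counts are all hypothetical. Only the participant count of 12 comes from the study.

```typescript
// Hypothetical coded finding from session notes. Values below are placeholders.
interface Finding {
  theme: string;
  mentions: number;     // number of participants who raised the theme
  significance: number; // 1 (minor) to 3 (blocks the workflow), judged by the researcher
}

// Score = share of participants who raised the theme, weighted by significance.
function score(finding: Finding, participants: number): number {
  return (finding.mentions / participants) * finding.significance;
}

// Return findings ordered from highest to lowest score.
function rank(findings: Finding[], participants: number): Finding[] {
  return [...findings].sort(
    (a, b) => score(b, participants) - score(a, participants)
  );
}

const participants = 12; // sessions conducted in this study

// Placeholder themes and counts for illustration only.
const findings: Finding[] = [
  { theme: "example theme A", mentions: 9, significance: 2 },
  { theme: "example theme B", mentions: 4, significance: 3 },
  { theme: "example theme C", mentions: 6, significance: 1 },
];

for (const f of rank(findings, participants)) {
  console.log(`${f.theme}: ${score(f, participants).toFixed(2)}`);
}
```

A score like this is a prompt for discussion rather than a verdict: a theme raised by few participants can still outrank a common one when it blocks the workflow.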

User Research Outcomes

By conducting this research and gaining insights from this process, I determined that many of the new concepts presented were positive changes for users.

As one example, I determined that the new information architecture tested better supports users in the new product.

It would not have been possible to come up with this concept without the depth of discovery and research that was done in this project. Without it, existing structures that don’t correspond to users’ experience in their workflow would likely have been replicated in the new app.

This process also identified ways to deliver further value, not only in our first launch of the new app, but in future iterations of the app as well. In the meantime, the vision that was created through this conceptual model can support conversations with customers about what the future will hold for this product.

Next Steps: Prototype, Beta Test, Implement, Evaluate & Refine

Now that the research data on our experimental solutions has been analyzed, the next step is to prototype the solutions that users found valuable so they can be beta tested. After beta testing, the build will be adapted based on user feedback as the new product is prepared for launch. Throughout this project, continually evaluating with users and refining based on learnings will be standard practice.

Project Team:

Director Product Management, PCL: Echo Idalski

Product Managers: Raj Sekhon and Sheila Ottersen

Product Owner: Subir Mukherjee

Engineering Leads: PC Choudhury and Peter Madsen